Automatic Modulation Classifier

Major Project - Identifying the Modulation Type of a Signal

For full details, please refer to the complete thesis, which is available as a PDF.

Abstract

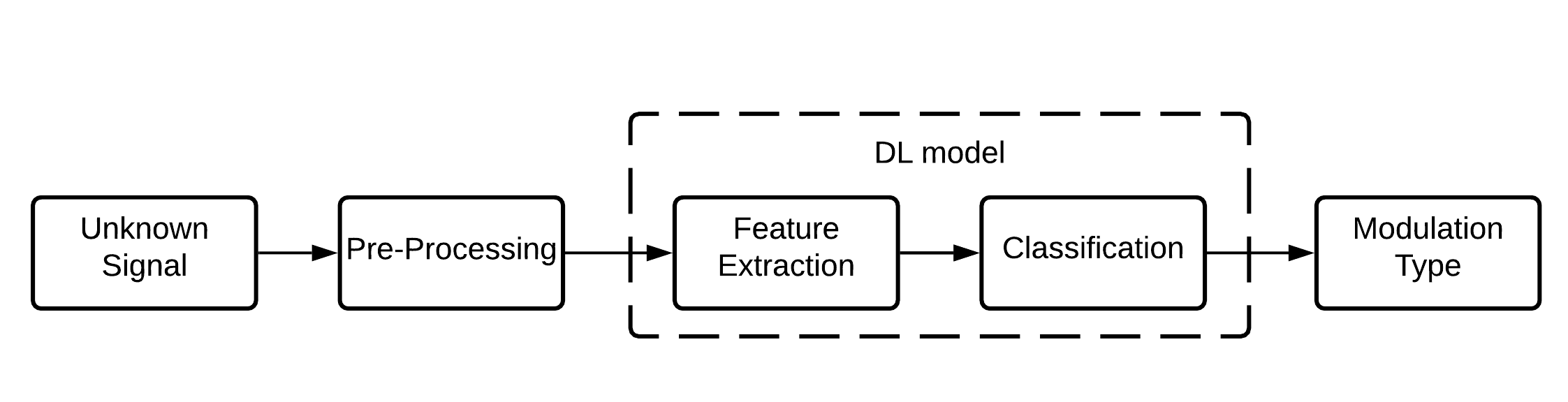

Methodology

We used multiple methodologies:

CNN-LSTM based technique

Of the approaches we evaluated, the CNN-LSTM model performed best.

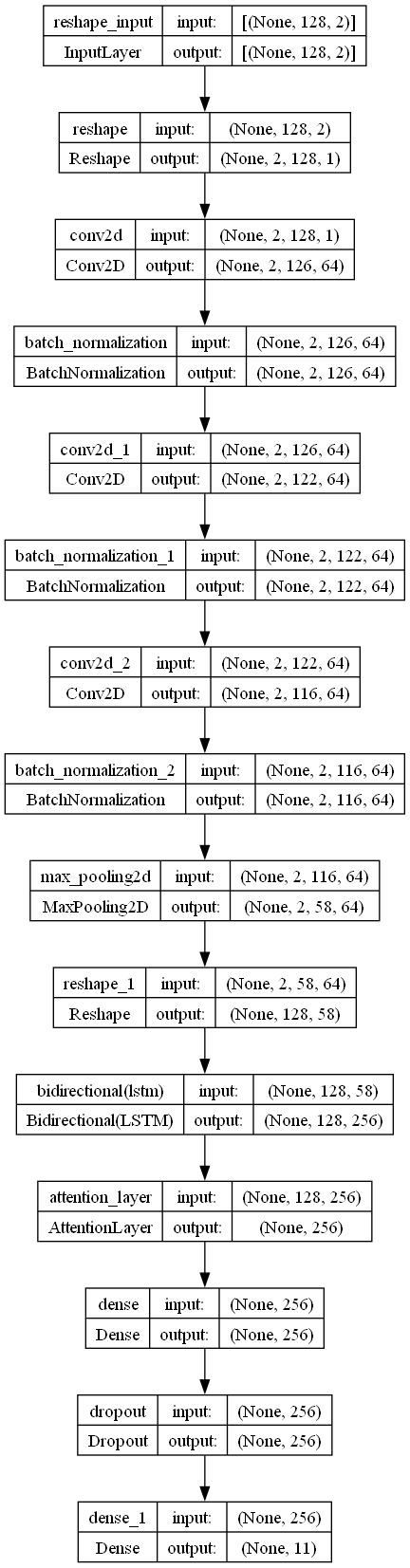

Key Features of the Model

- Multi-Scale Convolutional Layers: Uses convolutional layers with different kernel sizes to capture features at several scales, which helps represent the diverse characteristics of radio signals.

- Regularization Techniques (L1/L2): Applies L1 and L2 weight penalties to reduce overfitting; L1 encourages sparse weights, and L2 keeps weights small.

- Batch Normalization: Normalizes each layer's activations using mini-batch statistics, which speeds up training convergence and has a mild regularizing effect.

- Max Pooling Layers: Downsamples the feature maps, reducing computation and the number of parameters in the layers that follow, which also helps control overfitting.

-

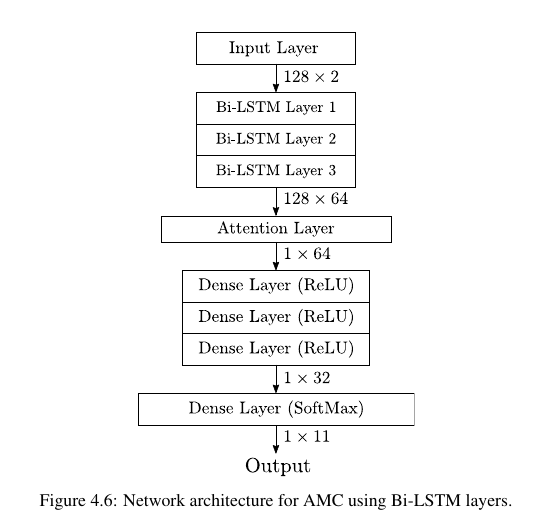

Bidirectional LSTM with Attention Mechanism: Processes data in both directions with a Bidirectional LSTM, capturing dependencies throughout the sequence. The Attention mechanism enables the model to focus on the most relevant parts of the input sequence for accurate predictions.

- Dropout Layer: A dropout layer randomly deactivates a fraction of its input units during training, further reducing overfitting.

- Dense and Output Layers: Dense layers with a high neuron count learn complex patterns, and the final layer uses a softmax activation to output a probability for each modulation class.

- Optimization and Metrics: Uses the Adam optimizer for adaptive learning rates, categorical cross-entropy as the loss function, and accuracy as the performance metric.
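The multi-scale convolution and max pooling steps above can be sketched in NumPy as follows. This is a minimal illustration, not the thesis code: it assumes a frame of 128 complex baseband samples stored as two channels (I and Q), one-dimensional convolutions, three parallel branches with hypothetical kernel sizes 3, 5 and 7, and random weights in place of trained ones. All function names are illustrative.

```python
import numpy as np


def conv1d_same(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Same'-padded 1-D convolution (cross-correlation, as in deep-learning layers).

    x: (T, C_in) signal, kernel: (K, C_in, C_out) weights -> (T, C_out) output.
    """
    k = kernel.shape[0]
    pad_left = (k - 1) // 2
    pad_right = k - 1 - pad_left
    xp = np.pad(x, ((pad_left, pad_right), (0, 0)))  # zero-pad along time only
    out = np.empty((x.shape[0], kernel.shape[2]))
    for t in range(x.shape[0]):
        window = xp[t:t + k]  # (K, C_in) window around sample t
        out[t] = np.tensordot(window, kernel, axes=([0, 1], [0, 1]))
    return out


def multi_scale_block(x: np.ndarray, kernels: list) -> np.ndarray:
    """Run one branch per kernel size, apply ReLU, and stack the branches as channels."""
    branches = [np.maximum(conv1d_same(x, w), 0.0) for w in kernels]
    return np.concatenate(branches, axis=1)


def max_pool1d(x: np.ndarray, pool: int = 2) -> np.ndarray:
    """Non-overlapping max pooling along time; leftover samples that don't fill a window are dropped."""
    t = (x.shape[0] // pool) * pool
    return x[:t].reshape(-1, pool, x.shape[1]).max(axis=1)


rng = np.random.default_rng(0)
iq = rng.standard_normal((128, 2))  # placeholder frame: 128 samples of (I, Q)
kernels = [rng.normal(0.0, 0.1, (k, 2, 8)) for k in (3, 5, 7)]  # 8 filters per branch
features = max_pool1d(multi_scale_block(iq, kernels), pool=2)
print(features.shape)  # (64, 24)
```

Concatenating the branches lets later layers see short-, medium- and long-range patterns side by side, while pooling halves the sequence length before the recurrent stage.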

Together, these components are designed to address the main challenges of radio signal modulation recognition and to classify different radio signal modulations accurately, a task of considerable importance in wireless communications.
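The attention mechanism and softmax output layer from the key features above can be illustrated with a small NumPy sketch of additive attention pooling. It assumes the hidden states `h` come from an encoder such as the Bi-LSTM (here they are random placeholders); the sizes, weight names and number of classes are hypothetical, and the thesis model may use a different attention formulation.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Softmax over the last axis; subtracting the max avoids overflow in exp."""
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def attention_pool(h: np.ndarray, w: np.ndarray, v: np.ndarray):
    """Additive attention over time steps.

    h: (T, D) hidden states, w: (D, A) and v: (A,) attention parameters.
    Returns the context vector (D,) and the attention weights (T,).
    """
    scores = np.tanh(h @ w) @ v  # one relevance score per time step
    alpha = softmax(scores)  # non-negative weights that sum to 1
    context = alpha @ h  # attention-weighted sum of the hidden states
    return context, alpha


def categorical_crossentropy(y_onehot: np.ndarray, probs: np.ndarray, eps: float = 1e-12) -> float:
    """Cross-entropy between a one-hot label and predicted class probabilities."""
    return float(-np.sum(y_onehot * np.log(probs + eps)))


rng = np.random.default_rng(0)
T, D, A, N_CLASSES = 64, 32, 16, 8  # hypothetical sizes
h = rng.standard_normal((T, D))  # stand-in for Bi-LSTM outputs
context, alpha = attention_pool(h, rng.normal(0, 0.1, (D, A)), rng.normal(0, 0.1, A))
probs = softmax(context @ rng.normal(0, 0.1, (D, N_CLASSES)))  # softmax output layer
loss = categorical_crossentropy(np.eye(N_CLASSES)[3], probs)  # true class index 3 (arbitrary)
print(alpha.sum(), probs.sum(), loss)
```

The attention weights `alpha` show which time steps contributed most to the context vector, which is what lets the model "focus" on the informative parts of a frame.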

Other Methods

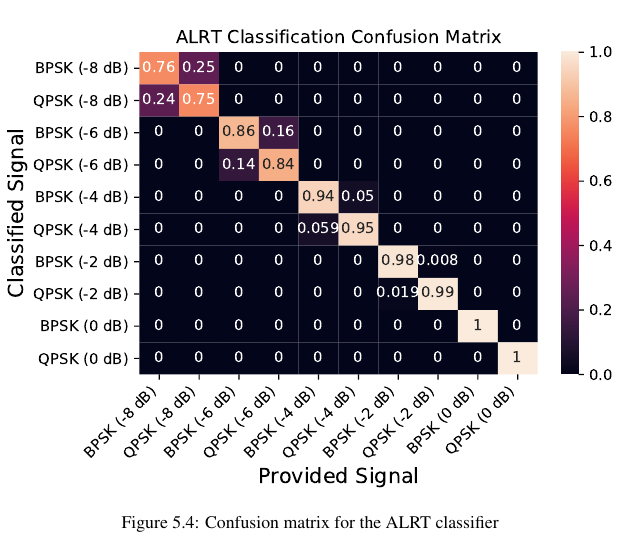

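- Likelihood-Based Classifiers: A traditional approach that models the received signal probabilistically under each candidate modulation and selects the modulation with the highest likelihood.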

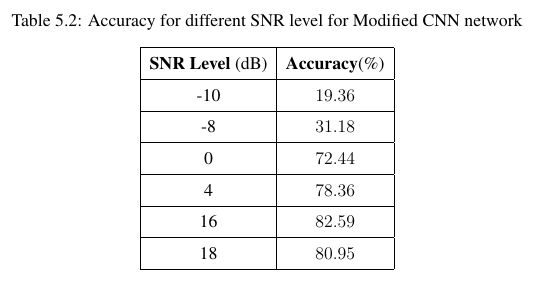

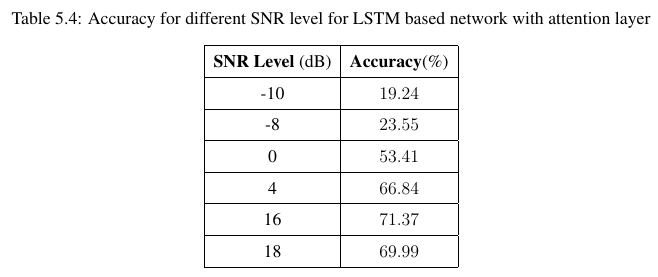

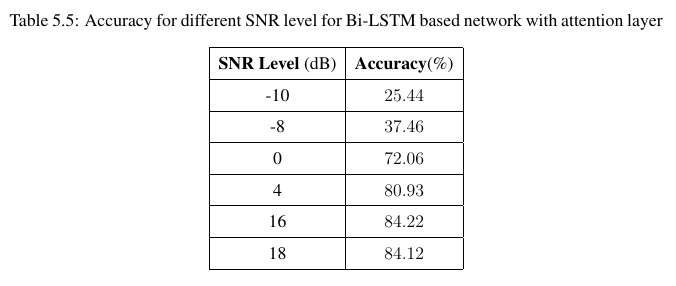

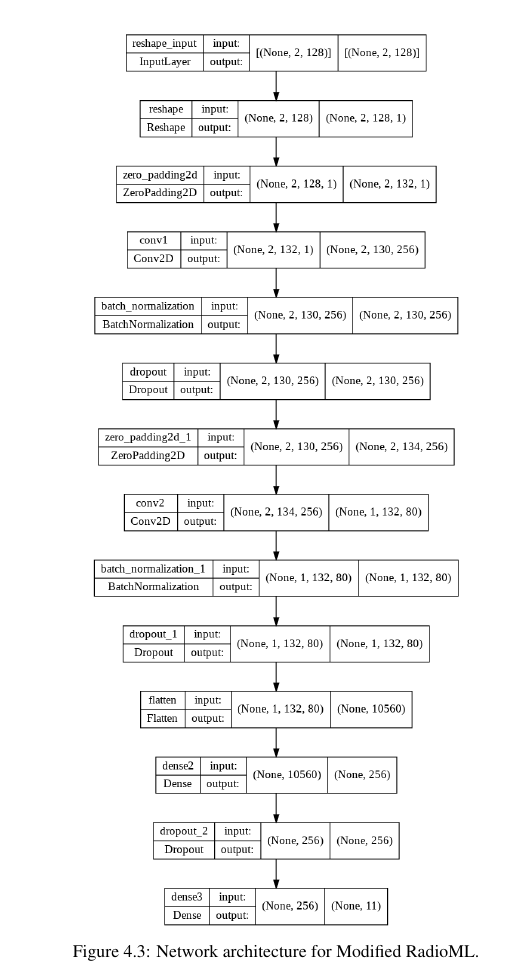

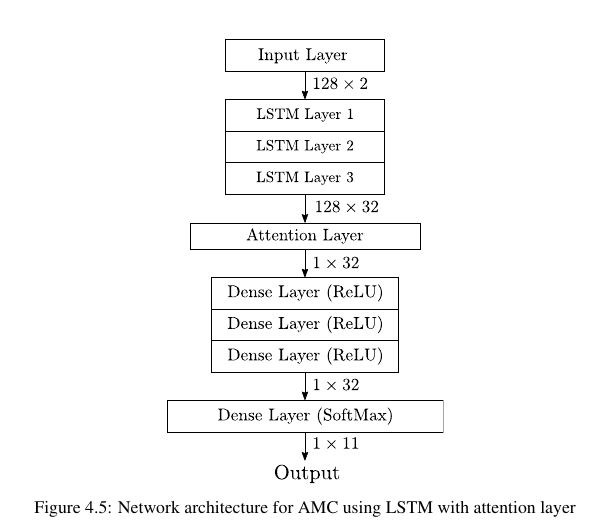

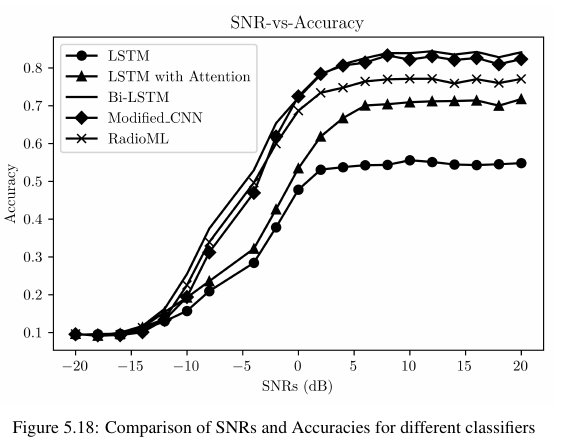

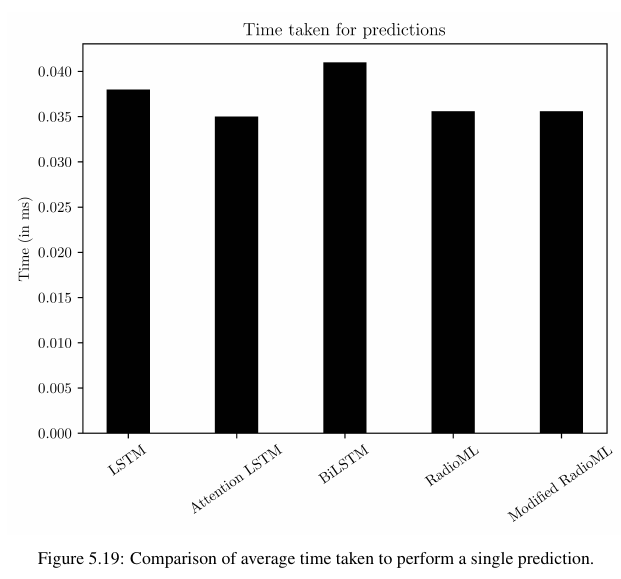

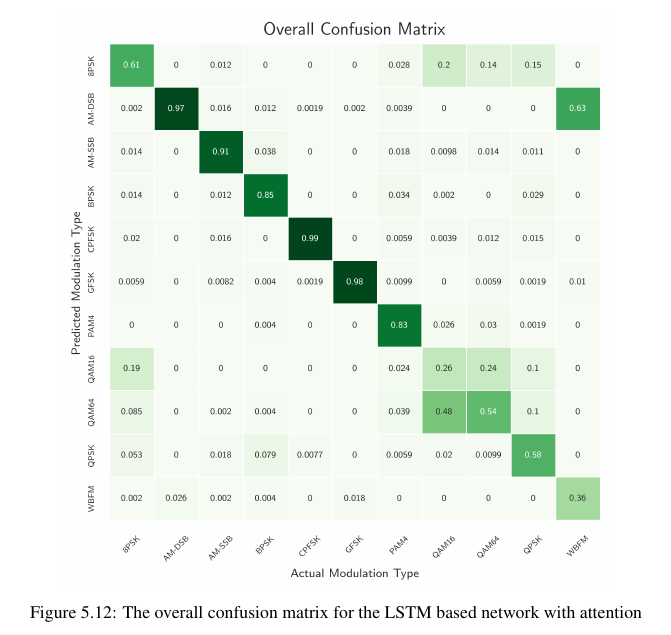

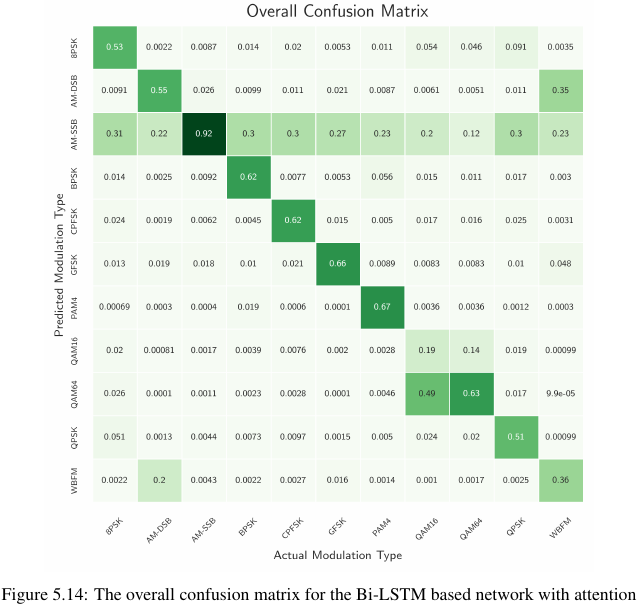

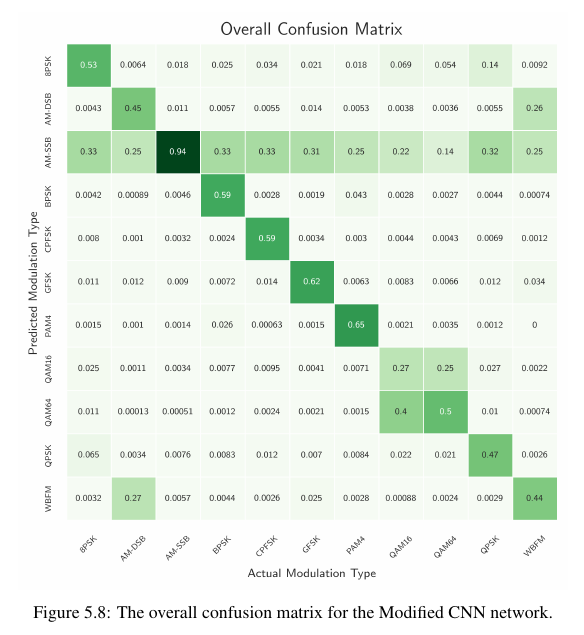
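As a rough illustration of the likelihood-based approach, the sketch below implements an average-likelihood classifier in NumPy. The simplifying assumptions are ours, not necessarily the thesis's: additive white Gaussian noise with known variance, perfect timing and carrier synchronization, equiprobable symbols, and only BPSK, QPSK and 8PSK as candidates. For each candidate it sums, over all received samples, the log of the average complex-Gaussian likelihood across the constellation points, then picks the largest total. `CANDIDATES`, `log_likelihood` and `classify` are hypothetical names.

```python
import numpy as np


def psk_points(m: int, offset: float = 0.0) -> np.ndarray:
    """Unit-energy M-PSK constellation rotated by `offset` radians."""
    return np.exp(1j * (2 * np.pi * np.arange(m) / m + offset))


# Candidate constellations (assumed); QPSK is drawn with a pi/4 offset.
CANDIDATES = {
    "BPSK": psk_points(2),
    "QPSK": psk_points(4, np.pi / 4),
    "8PSK": psk_points(8),
}


def log_likelihood(r: np.ndarray, points: np.ndarray, noise_var: float) -> float:
    """Sum over samples of log[(1/M) * sum_m CN(r_n; s_m, noise_var)]."""
    x = -np.abs(r[:, None] - points[None, :]) ** 2 / noise_var  # (N, M) exponents
    x_max = x.max(axis=1, keepdims=True)
    log_sum = x_max[:, 0] + np.log(np.exp(x - x_max).sum(axis=1))  # stable log-sum-exp
    per_sample = log_sum - np.log(len(points)) - np.log(np.pi * noise_var)
    return float(per_sample.sum())


def classify(r: np.ndarray, noise_var: float):
    """Return the candidate with the highest total log-likelihood, plus all scores."""
    scores = {name: log_likelihood(r, pts, noise_var) for name, pts in CANDIDATES.items()}
    return max(scores, key=scores.get), scores


rng = np.random.default_rng(0)
noise_var, n = 0.05, 500
symbols = CANDIDATES["QPSK"][rng.integers(0, 4, size=n)]
noise = np.sqrt(noise_var / 2) * (rng.standard_normal(n) + 1j * rng.standard_normal(n))
label, scores = classify(symbols + noise, noise_var)
print(label)  # QPSK
```

Because the QPSK points are a subset of the 8PSK points here, the 1/M factor is what prevents the larger alphabet from always winning: when the data fit QPSK, 8PSK spreads its probability over twice as many points and scores lower.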

- Other Deep Learning Techniques: Several other neural network architectures were also evaluated, including a modified Convolutional Neural Network (ConvNet), a Long Short-Term Memory (LSTM) network enhanced with an attention layer, and a Bidirectional LSTM (Bi-LSTM) for more comprehensive feature extraction.

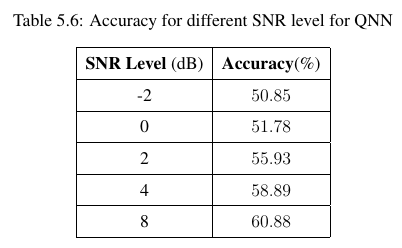

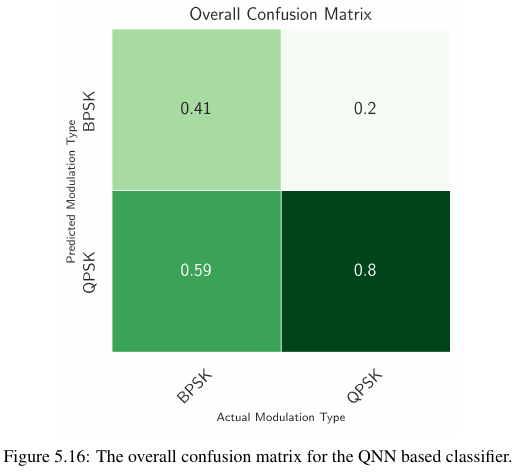
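The bidirectional processing used by the Bi-LSTM can be sketched by running one LSTM over the sequence and a second LSTM over the reversed sequence, then concatenating their outputs at each time step. This NumPy forward pass is a hypothetical illustration with random, untrained weights, an assumed gate ordering and assumed sizes; in practice a deep-learning framework's LSTM layers would be used instead.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lstm_pass(x, w, u, b):
    """One LSTM direction over x (T, D). w: (D, 4H), u: (H, 4H), b: (4H,). Returns (T, H)."""
    hidden = u.shape[0]
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    outputs = np.empty((x.shape[0], hidden))
    for t in range(x.shape[0]):
        z = x[t] @ w + h @ u + b
        i = sigmoid(z[:hidden])  # input gate
        f = sigmoid(z[hidden:2 * hidden])  # forget gate
        g = np.tanh(z[2 * hidden:3 * hidden])  # candidate cell update
        o = sigmoid(z[3 * hidden:])  # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs[t] = h
    return outputs


def bilstm(x, fwd_params, bwd_params):
    """Concatenate a forward pass and a time-reversed backward pass at every step."""
    forward = lstm_pass(x, *fwd_params)
    backward = lstm_pass(x[::-1], *bwd_params)[::-1]  # flip back into time order
    return np.concatenate([forward, backward], axis=1)


def init_params(rng, d, hidden):
    """Hypothetical small random initialization returning (w, u, b)."""
    return (rng.normal(0, 0.1, (d, 4 * hidden)),
            rng.normal(0, 0.1, (hidden, 4 * hidden)),
            np.zeros(4 * hidden))


rng = np.random.default_rng(0)
x = rng.standard_normal((128, 2))  # placeholder I/Q frame
out = bilstm(x, init_params(rng, 2, 16), init_params(rng, 2, 16))
print(out.shape)  # (128, 32)
```

At each time step the first half of the output summarizes the samples up to that point and the second half summarizes the samples from that point onward, which is what "processing in both directions" means here.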

- Quantum Neural Network (QNN): A basic QNN was also explored.

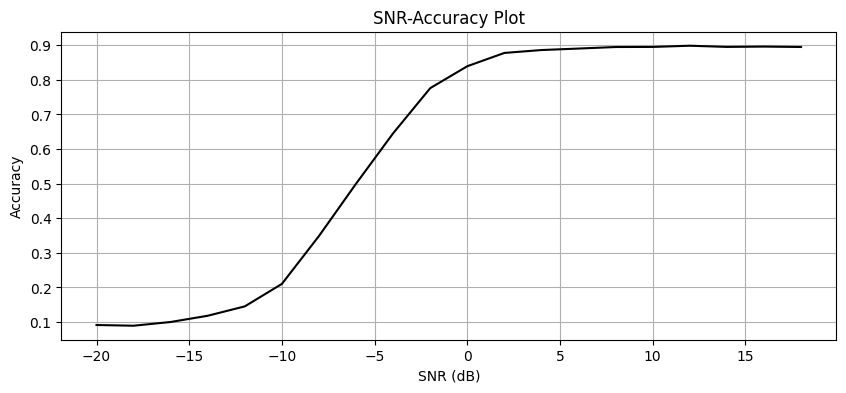

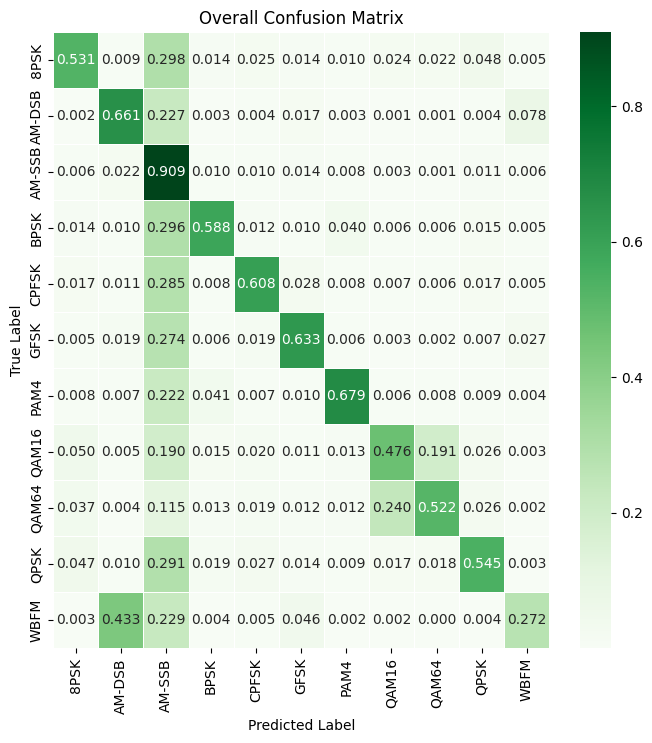
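One common form of a basic QNN is a variational circuit: input features are encoded as qubit rotation angles, entangling gates and trainable rotations follow, and a measured expectation value serves as the output. The NumPy state-vector simulation below sketches such a two-qubit circuit. The circuit layout, the two input feature angles, the weight shape and the mapping to a binary decision are all assumptions for illustration, not necessarily the QNN used in this project.

```python
import numpy as np

CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=float)  # control: qubit 0, target: qubit 1
Z0 = np.diag([1.0, 1.0, -1.0, -1.0])  # Pauli-Z on qubit 0 (basis order |q0 q1>)


def ry(theta: float) -> np.ndarray:
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


def qnn_expectation(features, weights) -> float:
    """features: two input angles; weights: (layers, 2) trainable angles. Returns <Z0> in [-1, 1]."""
    state = np.zeros(4)
    state[0] = 1.0  # start in |00>
    state = np.kron(ry(features[0]), ry(features[1])) @ state  # angle encoding
    for w0, w1 in weights:
        state = CNOT @ state  # entangle the two qubits
        state = np.kron(ry(w0), ry(w1)) @ state  # trainable rotation layer
    return float(state @ Z0 @ state)  # amplitudes stay real, so no conjugate is needed


rng = np.random.default_rng(0)
weights = rng.uniform(0, 2 * np.pi, size=(2, 2))  # two layers, untrained
score = qnn_expectation([0.3, 1.2], weights)  # hypothetical feature angles
p_class1 = (1 - score) / 2  # map <Z0> to a probability for a binary decision
print(score, p_class1)
```

Training such a circuit means adjusting `weights` so that `p_class1` matches the labels, for example by minimizing a cross-entropy loss.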

Results

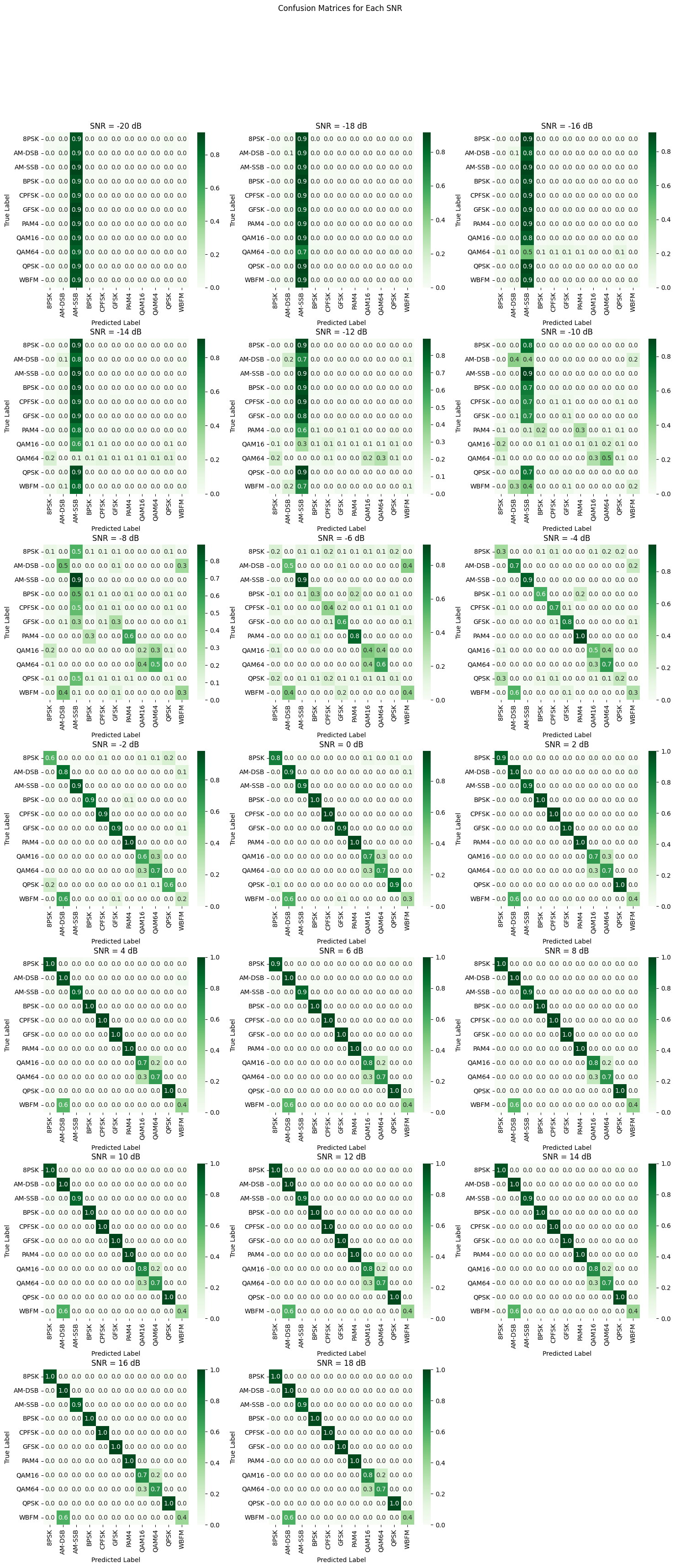

Confusion matrix

Accuracy
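The confusion matrix and accuracy can be computed from true and predicted class indices as in this NumPy sketch. The function names are illustrative; the row-normalized matrix gives the fraction of each true class assigned to each predicted class, a common way to display confusion matrices.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes: int) -> np.ndarray:
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)  # count each (true, pred) pair
    return cm


def accuracy(cm: np.ndarray) -> float:
    """Fraction of samples on the diagonal (correctly classified)."""
    return float(np.trace(cm) / cm.sum())


def row_normalize(cm: np.ndarray) -> np.ndarray:
    """Divide each row by its count; rows with no samples stay all zeros."""
    totals = cm.sum(axis=1, keepdims=True)
    return cm / np.maximum(totals, 1)


cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
print(cm.tolist())  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(round(accuracy(cm), 3))  # 0.667
```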